mirror of

https://github.com/netbox-community/netbox.git

synced 2026-02-26 02:35:12 +01:00

NetBox mixes authors of modifications in the changelog when there are a lot of concurrent modifications #5589

Closed

opened 2025-12-29 19:29:48 +01:00 by adam

13 comments

Labels: type: bug

Reference: starred/netbox#5589

Originally created by @drygdryg on GitHub (Oct 30, 2021).

NetBox version

v3.0.8

Python version

3.9

Steps to Reproduce

1. Load the database dump (netbox.sql) into your NetBox instance according to the manual.
2. In test_case.py, set NETBOX_URL to match your NetBox instance.
3. Run test_case.py. It needs the httpx module; install it if it is missing: pip install httpx.
4. Log in to NetBox with these credentials:

username: admin

password: admin

5. Open the changelog (http://netbox.local/extras/changelog/).

Expected Behavior

The changelog should show each user changing only their own device, following this logic:

test_user1 updates Test router 1
test_user2 updates Test router 2
test_user3 updates Test router 3
etc.
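The expected mapping can be checked mechanically. Below is a minimal sketch, assuming changelog entries have already been reduced to (username, device name) pairs; the function names and the sample data are hypothetical and are not taken from test_case.py.

```python
import re


def expected_device(username):
    """Map 'test_userN' to 'Test router N'; return None for any other username."""
    match = re.fullmatch(r"test_user(\d+)", username)
    return f"Test router {match.group(1)}" if match else None


def find_mixups(entries):
    """Return the (username, device) pairs where the device is not the user's own."""
    return [(user, device) for user, device in entries
            if expected_device(user) != device]


# Hypothetical sample: the second entry is attributed to the wrong user.
entries = [
    ("test_user1", "Test router 1"),
    ("test_user2", "Test router 3"),
    ("test_user3", "Test router 3"),
]
mixups = find_mixups(entries)
print(mixups)  # [('test_user2', 'Test router 3')]
```

An empty result would mean every change is attributed to the expected user. Entries from users outside the test_userN pattern are reported too, since they have no expected device.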

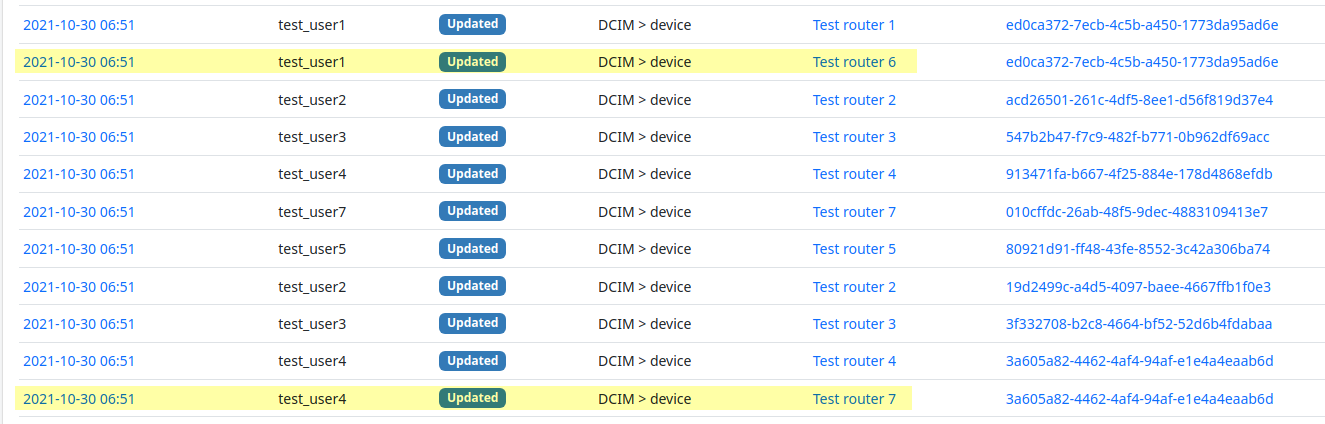

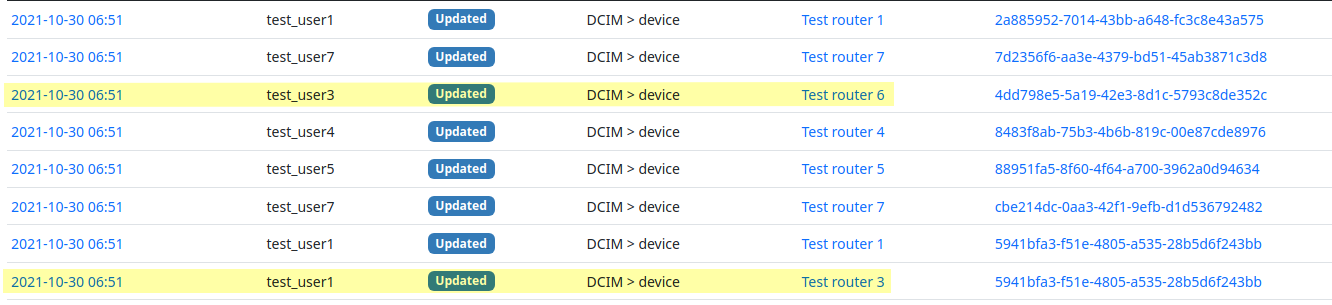

Observed Behavior

Users are mixed in the changelog. Some examples:

@kkthxbye-code commented on GitHub (Oct 30, 2021):

Probably the same issue as #7657, #5142, and #5609: there's a race condition in ObjectChange handling when using an application server that runs its workers with multiple threads.

I don't really know a lot about Django, but isn't this iffy? I'm not sure that it is thread-safe.

0b0ab9277c/netbox/extras/context_managers.py (L25-L38)

If you just want a workaround, try running gunicorn with threads = 1.
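For reference, the workaround could look like this in a gunicorn configuration file, which is plain Python. This is only a sketch: the bind address and worker count are assumptions for illustration, and only threads = 1 comes from the comment above.

```python
# gunicorn.py - illustrative configuration; every value except `threads` is an assumption.

# Address the application server listens on (assumed; adjust to your setup).
bind = "127.0.0.1:8001"

# Number of worker processes (assumed). Processes do not share memory,
# so one worker cannot see another worker's per-request state.
workers = 4

# One thread per worker, as suggested above: with a single thread,
# each worker handles one request at a time.
threads = 1
```

The file would typically be passed to gunicorn with its --config option. The cost is less concurrency per worker, so the worker count may need to go up to handle the same load.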

@tyler-8 commented on GitHub (Oct 31, 2021):

@drygdryg Can you try this branch and see if it resolves the issue for you? It seems to fix it on my dev instance. https://github.com/tyler-8/netbox/tree/objectchange_threadsafe

[Edit] Hmm... maybe not, looked like it was working for a few test runs. Back to the drawing board I suppose.

@tyler-8 commented on GitHub (Nov 1, 2021):

I made a new update, try the https://github.com/tyler-8/netbox/tree/objectchange_threadsafe branch now.

@drygdryg commented on GitHub (Nov 1, 2021):

@tyler-8 I tested this with 9 workers and 3 threads, and it solved the issue for me, thanks.

@tyler-8 commented on GitHub (Nov 1, 2021):

Try fewer workers and more threads, such as 4 and 4.

For testing this, we want the threads to be used more heavily; with 9 workers (processes) serving your test script, there is barely enough load to exercise the threads.

@DanSheps commented on GitHub (Nov 1, 2021):

Closing this in favour of #7657

@tyler-8 commented on GitHub (Nov 1, 2021):

I'm trying a slightly different track with this one now @DanSheps - this particular issue might be due more to using non-unique values for dispatch_uid in the signal .connect() methods when using gunicorn threading. Related to the other issue, but possibly different enough to warrant another solution.

The change would be to b5e8157700/netbox/extras/context_managers.py (L26).
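The block below is not the change proposed in this comment. It is a simplified, Django-free model of how a process-wide signal with keyed receivers might behave when two requests overlap. The Signal class, the uid strings, and the hand-written interleaving are all assumptions made for illustration, including the assumed rule that connecting under an already registered uid leaves the first receiver in place.

```python
class Signal:
    """Tiny stand-in for a process-wide signal; not Django's implementation."""

    def __init__(self):
        self.receivers = []  # (uid, handler) pairs shared by every thread

    def connect(self, handler, dispatch_uid):
        # Assumed rule: a uid that is already registered is not replaced.
        if all(uid != dispatch_uid for uid, _ in self.receivers):
            self.receivers.append((dispatch_uid, handler))

    def disconnect(self, dispatch_uid):
        self.receivers = [(u, h) for u, h in self.receivers if u != dispatch_uid]

    def send(self, obj):
        for _, handler in self.receivers:
            handler(obj)


def simulate(uid_for):
    """Interleave two requests by hand; return the recorded (user, object) pairs."""
    post_save = Signal()
    changelog = []

    def handler_for(user):
        return lambda obj: changelog.append((user, obj))

    # Both requests register their handler before either one saves.
    post_save.connect(handler_for("test_user1"), dispatch_uid=uid_for("test_user1"))
    post_save.connect(handler_for("test_user2"), dispatch_uid=uid_for("test_user2"))
    post_save.send("Test router 1")  # save made by test_user1's request
    post_save.send("Test router 2")  # save made by test_user2's request
    post_save.disconnect(uid_for("test_user1"))
    post_save.disconnect(uid_for("test_user2"))
    return changelog


# Hypothetical uid strings: one shared by all requests, one unique per user.
shared = simulate(lambda user: "changelog_handler")
unique = simulate(lambda user: f"changelog_handler_{user}")
print(shared)       # test_user1 is credited with both saves
print(len(unique))  # 4: every handler fires for every save
```

In this model a shared uid credits both saves to the first user, while unique uids make every registered handler fire on every save, so wrong attributions remain either way. That is only a property of the model, not a diagnosis of NetBox.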

@tyler-8 commented on GitHub (Nov 1, 2021):

@drygdryg - can you try again using this branch instead? https://github.com/tyler-8/netbox/tree/objectchange_dispatch_uids

I'm seeing good results with your script without the use of threading.Lock() like in the previous branch.

@DanSheps commented on GitHub (Nov 1, 2021):

@tyler-8 since this one might be semi-unrelated, I will re-open this for you

@tyler-8 commented on GitHub (Nov 1, 2021):

Boy this is frustrating to troubleshoot and test, lol.

Initially, using truly unique dispatch_uid seemed to work through a couple of test runs of the test_case.py script, but now I'm seeing the user mix-ups. On the other hand, the original branch https://github.com/tyler-8/netbox/tree/objectchange_threadsafe is still solving the issue consistently.

@tyler-8 commented on GitHub (Nov 1, 2021):

Benchmarks using a modified test_case.py (ThreadPoolExecutor instead of ProcessPoolExecutor)

@tyler-8 commented on GitHub (Nov 1, 2021):

@DanSheps After tinkering with this issue more today (and digging up the old issues that had similar errors/behavior), I think it is in fact the same issue. You can close this one again (sorry :P ) and I'll post my updates in #7657

@jeremystretch commented on GitHub (Nov 3, 2021):

No worries! We'll close this one for now, and revisit if the eventual fix for #7657 is determined not to resolve this particular issue as well.